In machine learning, speed is a feature: Introducing Fwumious Wabbit

We are announcing the open-source release of Fwumious Wabbit, an extremely fast implementation of Logistic Regression and Field-aware Factorization Machines written in Rust.

At Outbrain, we are invested in fast online machine learning at massive scale. We don’t update our models once a day or even once an hour; we update them every few minutes.

This means we need very fast and efficient model research, training and serving. We’ve evaluated many options and concluded that Vowpal Wabbit is the most efficient tool out there in terms of CPU cycles. To the best of our knowledge, it used to be the fastest online learner you could get.

Today we are introducing Fwumious Wabbit, a machine learning tool for logistic regression (LR) and field-aware factorization machines (FFM) that is partly compatible with Vowpal Wabbit. Its main feature is simply speed.

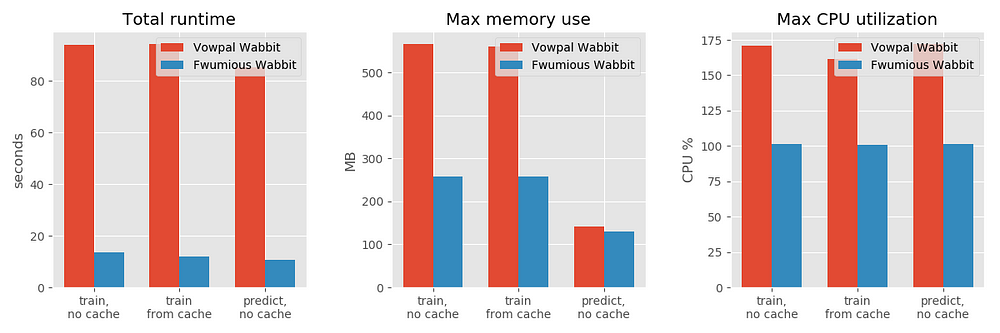

The chart above compares Fwumious Wabbit with Vowpal Wabbit on a synthetic dataset. In our tests, TensorFlow on the CPU was also an order of magnitude slower than Fwumious Wabbit.

Fwumious Wabbit uses a “cache” concept inspired by Vowpal Wabbit: on the first run over the input data it builds a specialized cache file, and it reads that file on all future runs over the same data instead of parsing the text again. Like Vowpal Wabbit, it supports dynamic specification of feature combinations (for LR) and field specifications (for FFM). This makes it well suited to feature selection, feature-combination search and hyperparameter tuning, where the same data is scanned many times.
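To make the cache idea concrete, here is a minimal sketch of “parse once, replay from a compact binary form”. It assumes a Vowpal-Wabbit-style text line such as `1 |A user123 |B ad456` and a made-up record layout (little-endian `f32` label, `u32` feature count, then `u32` hashed features); FNV-1a stands in for whatever hash Fwumious Wabbit actually uses. This is an illustration of the concept, not Fwumious Wabbit’s real cache format.

```rust
use std::io::{self, Read, Write};

/// One parsed training example: a label plus hashed feature indices.
#[derive(Debug, PartialEq)]
struct Example {
    label: f32,
    features: Vec<u32>,
}

/// 32-bit FNV-1a over "namespace^token" (a stand-in hash for this sketch).
fn fnv1a(namespace: &str, token: &str) -> u32 {
    let mut h: u32 = 0x811c_9dc5;
    for b in namespace.bytes().chain(std::iter::once(b'^')).chain(token.bytes()) {
        h ^= b as u32;
        h = h.wrapping_mul(0x0100_0193);
    }
    h
}

/// Parse a VW-style line such as "1 |A user123 |B ad456".
/// Returns None if the label is missing or not a number.
fn parse_line(line: &str) -> Option<Example> {
    let mut parts = line.split('|');
    let label: f32 = parts.next()?.trim().parse().ok()?;
    let mut features = Vec::new();
    for block in parts {
        let mut tokens = block.split_whitespace();
        // The first token after '|' is the namespace name.
        if let Some(ns) = tokens.next() {
            features.extend(tokens.map(|tok| fnv1a(ns, tok)));
        }
    }
    Some(Example { label, features })
}

/// Append one record in the hypothetical layout: label, count, features.
fn write_cache<W: Write>(out: &mut W, ex: &Example) -> io::Result<()> {
    out.write_all(&ex.label.to_le_bytes())?;
    out.write_all(&(ex.features.len() as u32).to_le_bytes())?;
    for f in &ex.features {
        out.write_all(&f.to_le_bytes())?;
    }
    Ok(())
}

/// Read one record back; hitting EOF while reading the label ends the cache.
fn read_cache<R: Read>(inp: &mut R) -> io::Result<Option<Example>> {
    let mut buf4 = [0u8; 4];
    match inp.read_exact(&mut buf4) {
        Ok(()) => {}
        Err(e) if e.kind() == io::ErrorKind::UnexpectedEof => return Ok(None),
        Err(e) => return Err(e),
    }
    let label = f32::from_le_bytes(buf4);
    inp.read_exact(&mut buf4)?;
    let n = u32::from_le_bytes(buf4) as usize;
    let mut features = Vec::with_capacity(n);
    for _ in 0..n {
        inp.read_exact(&mut buf4)?;
        features.push(u32::from_le_bytes(buf4));
    }
    Ok(Some(Example { label, features }))
}

fn main() -> io::Result<()> {
    let text = "1 |A user123 |B ad456\n0 |A user789 |B ad456\n";
    // First run: parse and hash the text once, writing the binary cache.
    let mut cache: Vec<u8> = Vec::new();
    for line in text.lines() {
        if let Some(ex) = parse_line(line) {
            write_cache(&mut cache, &ex)?;
        }
    }
    // Later runs: decode the cache directly, with no text parsing or hashing.
    let mut reader = &cache[..];
    while let Some(ex) = read_cache(&mut reader)? {
        println!("label={} features={:?}", ex.label, ex.features);
    }
    Ok(())
}
```

The payoff is that tokenizing and hashing, which dominate the cost of reading text input, happen only once; every later pass (for example, each step of a hyperparameter search) just copies fixed-width integers out of the buffer.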

Each Fwumious Wabbit run uses a single core, so it is well suited to parallel model search with one run per core on heavy-duty ML machines (for example, machines with 64 cores). We are able to run a hundred complex models over hundreds of millions of records on a single machine in just a few hours.
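The “one single-core run per core” pattern can be sketched as follows. In practice you would launch separate Fwumious Wabbit processes; here, to keep the example self-contained, each configuration runs on its own thread, and a toy SGD logistic regression (the hypothetical `train_and_evaluate`) stands in for one training run. The `Config` type and its fields are illustrative assumptions, not Fwumious Wabbit options.

```rust
use std::thread;

/// Hypothetical hyperparameters for one model run.
#[derive(Clone, Copy, Debug)]
struct Config {
    learning_rate: f32,
    epochs: usize,
}

fn sigmoid(z: f32) -> f32 {
    1.0 / (1.0 + (-z).exp())
}

fn dot(w: &[f32], x: &[f32]) -> f32 {
    w.iter().zip(x).map(|(a, b)| a * b).sum()
}

/// Toy single-threaded logistic regression trained with plain SGD; it stands in
/// for one single-core training run. Returns the average log loss on `data`.
fn train_and_evaluate(cfg: Config, data: &[(Vec<f32>, f32)]) -> f32 {
    let mut w = vec![0.0f32; data[0].0.len()];
    for _ in 0..cfg.epochs {
        for (x, y) in data {
            let g = sigmoid(dot(&w, x)) - *y; // d(log loss)/dz
            for (wi, xi) in w.iter_mut().zip(x) {
                *wi -= cfg.learning_rate * g * xi;
            }
        }
    }
    let total: f32 = data
        .iter()
        .map(|(x, y)| {
            let y = *y;
            let p = sigmoid(dot(&w, x)).clamp(1e-7, 1.0 - 1e-7);
            -(y * p.ln() + (1.0 - y) * (1.0 - p).ln())
        })
        .sum();
    total / data.len() as f32
}

fn main() {
    // Tiny synthetic dataset; the first column is a bias term.
    let data: Vec<(Vec<f32>, f32)> = vec![
        (vec![1.0, 0.0, 1.0], 1.0),
        (vec![1.0, 1.0, 0.0], 0.0),
        (vec![1.0, 0.0, 0.5], 1.0),
        (vec![1.0, 1.0, 0.2], 0.0),
    ];
    let configs = [0.01f32, 0.1, 0.5, 1.0].map(|lr| Config { learning_rate: lr, epochs: 50 });

    // One thread per configuration; each run is itself single-threaded and
    // shares no mutable state, so N configurations can keep N cores busy.
    let data = &data;
    let results: Vec<(Config, f32)> = thread::scope(|s| {
        let handles: Vec<_> = configs
            .iter()
            .map(move |&cfg| s.spawn(move || (cfg, train_and_evaluate(cfg, data))))
            .collect();
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    });

    for (cfg, loss) in &results {
        println!("learning_rate={:<5} log_loss={:.4}", cfg.learning_rate, loss);
    }
    let best = results.iter().min_by(|a, b| a.1.total_cmp(&b.1)).unwrap();
    println!("best learning_rate: {}", best.0.learning_rate);
}
```

Because every run is independent, throughput scales with the core count without any locking; the trade-off is that a single model never trains faster than one core allows.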

The proof is in the pudding. Check it out at https://github.com/outbrain/fwumious_wabbit. The code includes a benchmarking suite you can run on your local machine.

Here is what makes Fwumious Wabbit fast:

- Implements only Logistic Regression and Field-aware Factorization Machines

- Uses the hashing trick, a lookup table for AdaGrad and a tight encoding format for the “input cache”

- Requires feature namespaces to be declared up front

- Prefetches weights from memory (avoiding pipeline stalls)

- Written in Rust with heavy use of code specialization (via macros and traits)
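Two of the items above, the hashing trick and a lookup table for AdaGrad, can be illustrated together. The sketch below is one plausible way such a table can work, not Fwumious Wabbit’s actual layout: the AdaGrad factor 1/sqrt(accumulator) is read from a precomputed table indexed by the top bits (sign, exponent and 4 mantissa bits) of the accumulator’s `f32` representation, which keeps the relative error under about 2%. The table size, hash width, `INITIAL_ACC` value and FNV-1a hash are all assumptions for illustration.

```rust
/// Hypothetical sizes; Fwumious Wabbit's real defaults may differ.
const HASH_BITS: u32 = 18; // weight table has 2^18 slots
const MASK: u32 = (1 << HASH_BITS) - 1;
const LUT_SHIFT: u32 = 19; // keep sign + 8 exponent + 4 mantissa bits of an f32
const LUT_SIZE: usize = 1 << (32 - LUT_SHIFT);
const INITIAL_ACC: f32 = 1.0; // hypothetical starting value of AdaGrad accumulators

/// 32-bit FNV-1a over "namespace^value" (a stand-in hash for this sketch).
fn hash_feature(namespace: &str, value: &str) -> u32 {
    let mut h: u32 = 0x811c_9dc5;
    for b in namespace.bytes().chain(std::iter::once(b'^')).chain(value.bytes()) {
        h ^= b as u32;
        h = h.wrapping_mul(0x0100_0193);
    }
    h
}

struct HashedLr {
    weights: Vec<f32>,
    acc: Vec<f32>, // per-slot sum of squared gradients (AdaGrad)
    lut: Vec<f32>, // lut[top bits of acc] ≈ 1 / sqrt(acc)
    learning_rate: f32,
}

impl HashedLr {
    fn new(learning_rate: f32) -> Self {
        let slots = 1usize << HASH_BITS;
        // Each entry holds 1/sqrt of the midpoint of its bucket of f32 values.
        let lut = (0..LUT_SIZE)
            .map(|i| {
                let mid = f32::from_bits(((i as u32) << LUT_SHIFT) | (1 << (LUT_SHIFT - 1)));
                1.0 / mid.sqrt()
            })
            .collect();
        HashedLr { weights: vec![0.0; slots], acc: vec![INITIAL_ACC; slots], lut, learning_rate }
    }

    /// Approximate 1/sqrt(x) for x > 0 with one shift and one table read.
    fn inv_sqrt_lut(&self, x: f32) -> f32 {
        self.lut[(x.to_bits() >> LUT_SHIFT) as usize]
    }

    fn predict(&self, features: &[u32]) -> f32 {
        // Binary features: the logit is the sum of the hashed slots' weights.
        let z: f32 = features.iter().map(|&h| self.weights[(h & MASK) as usize]).sum();
        1.0 / (1.0 + (-z).exp())
    }

    /// One AdaGrad step on the log loss; returns the prediction made before updating.
    fn learn(&mut self, features: &[u32], label: f32) -> f32 {
        let p = self.predict(features);
        let g = p - label; // d(log loss)/dz; each active feature has value 1
        for &h in features {
            let i = (h & MASK) as usize;
            self.acc[i] += g * g;
            let step = self.learning_rate * g * self.inv_sqrt_lut(self.acc[i]);
            self.weights[i] -= step;
        }
        p
    }
}

fn main() {
    let mut model = HashedLr::new(0.5);
    let bias = hash_feature("const", "");
    // Toy stream: impressions of ad "sports" are clicked, impressions of "news" are not.
    let sports = [hash_feature("ad", "sports"), bias];
    let news = [hash_feature("ad", "news"), bias];
    for _ in 0..100 {
        model.learn(&sports, 1.0);
        model.learn(&news, 0.0);
    }
    println!("p(click | sports) = {:.3}", model.predict(&sports));
    println!("p(click | news)   = {:.3}", model.predict(&news));
}
```

The hashing trick means there is no feature dictionary to maintain: any string maps straight to a slot in a fixed-size array, at the cost of occasional collisions. The table replaces a square root and a division per weight update with a shift and a memory read.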

There are still more optimization opportunities, such as using AVX-512, even more specialization, heavier use of on-stack fixed-size data structures and possibly even ahead-of-time (AOT) compilation for each run to specialize all the code.
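As an illustration of what compile-time specialization and on-stack fixed-size structures can look like in Rust, the sketch below computes the FFM pairwise interaction term with the latent dimension `K` and the number of fields `FIELDS` fixed as const generics. Const generics are our choice for this sketch (the post mentions macros and traits), and the one-feature-per-field simplification and all names are hypothetical. For each pair of fields (i, j), the term adds the dot product of the latent vector of field i’s feature toward field j with the latent vector of field j’s feature toward field i.

```rust
/// Field-aware FM weights with compile-time latent size K and field count FIELDS.
/// weights[bucket][field] is the K-dim latent vector a hashed feature uses
/// when it interacts with a feature from `field`.
struct Ffm<const K: usize, const FIELDS: usize> {
    weights: Vec<[[f32; K]; FIELDS]>,
}

impl<const K: usize, const FIELDS: usize> Ffm<K, FIELDS> {
    fn new(buckets: usize, init: f32) -> Self {
        Ffm { weights: vec![[[init; K]; FIELDS]; buckets] }
    }

    /// Dot product over fixed-size arrays: because K is a constant, the
    /// compiler knows the trip count and can unroll or vectorize the loop.
    fn dot(a: &[f32; K], b: &[f32; K]) -> f32 {
        a.iter().zip(b).map(|(x, y)| x * y).sum()
    }

    /// Pairwise FFM interaction term for an example with one hashed feature
    /// per field; `feats` is a fixed-size array that lives on the stack.
    fn score(&self, feats: &[usize; FIELDS]) -> f32 {
        let mut z = 0.0;
        for i in 0..FIELDS {
            for j in (i + 1)..FIELDS {
                let vi = &self.weights[feats[i]][j]; // field i's feature, toward field j
                let vj = &self.weights[feats[j]][i]; // field j's feature, toward field i
                z += Self::dot(vi, vj);
            }
        }
        z
    }
}

fn main() {
    // Hypothetical setup: 3 fields (e.g. user, ad, site), K = 4, 1024 hash buckets.
    let model: Ffm<4, 3> = Ffm::new(1024, 0.1);
    let example = [17usize, 512, 900]; // one hashed bucket per field
    println!("FFM interaction score: {}", model.score(&example));
}
```

Each distinct `Ffm<K, FIELDS>` is compiled as its own specialized type, so no loop bound is read at run time and no heap allocation happens per example; the cost is one monomorphized copy of the code per configuration, which is part of why per-run AOT compilation is attractive.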

Finally, we’d like to thank the authors of Vowpal Wabbit for the inspiration and for showing the path to very efficient CPU implementations of machine learning. Speed is a feature.